I found a site called MapJack via this post at Mapperz last week, but haven't seen much other comment on it. They provide an "immersive photographic" view of the world similar to Google Street View, but they include data from some locations where you can only walk (not drive), and some that are indoors - including a tour of Alcatraz. Currently they just have a beta site with coverage for San Francisco. The user interface incorporates some very nice ideas.

One of the most interesting aspects of this for me is in the "About MapJack" page, where they say:

Mapjack.com showcases a new level of mapping technology. What others have done with NASA budgets and Star Wars-like equipment, we've done on a shoestring budget, along with a few trips to Radio Shack. Specifically, we developed an array of proprietary electronics, hardware and software tools that enable us to capture an entire city’s streets with relative ease and excellent image quality. We have a complete low-cost scalable system encompassing the entire work-flow process needed for Immersive Street-Side Imagery, from picture gathering to post-processing to assembling on a Website.

This is just another example of people finding ways to bring down the cost of relatively specialized and expensive data capture tasks - it made me think of this post on aerial photography by Ed Parsons.

Tuesday, July 31, 2007

GeoWeb report - part 1

Well, I made it back from the big road trip up to Vancouver for the GeoWeb conference. We got back home on Sunday evening, after a total of 3439 miles, going up through Wyoming and Montana and then across the Canadian Rockies through Canmore, Banff, Jasper and Whistler, and coming back in more of a straight line, through Washington, Oregon, Idaho and Utah. The Prius did very well, performing better at high speeds than I had expected.

But anyway, the GeoWeb conference was very good. The venue was excellent, especially the room where the plenary sessions were held, which was "in the round", with microphones, power and network connections for all attendees (it felt a bit like being at the United Nations). This was very good for encouraging audience interaction, even with a fairly large group. See the picture below of the closing panel featuring Michael Jones of Google, Vincent Tao of Microsoft, Ron Lake of Galdos, and me (of no fixed abode).

I will do a couple more posts as I work through my notes, but here are a few of the highlights. In his introductory comments, Ron Lake said that in past years the focus of the conference had primarily been on what the web could bring to "geo", but that now we were also seeing increasing discussion on what "geo" can bring to the web - I thought that this was a good and succinct observation.

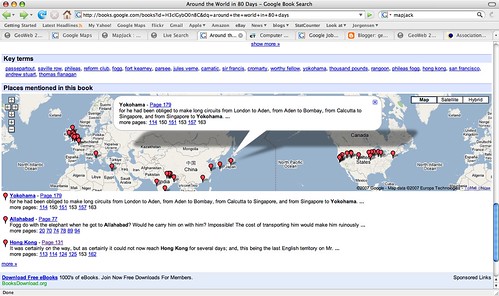

Perhaps one of the best examples of the latter was given by Michael Jones in his keynote, where he showed a very interesting example from Google Book Search, which I hadn't come across before. If you do a book search for Around the World in 80 Days, and scroll down to the bottom of the screen, you will see a map with markers showing all the places mentioned in the book. When you click on a marker, you get a list of the pages where this place is mentioned, and in some cases you can click through to that page.

This adds a powerful spatial dimension to a traditional text-based document. It is not much of a jump to think about incorporating this spatial dimension into the book search capability, and if you can do this on books, why not all documents indexed by Google? Michael said that he expected to see the "modality" of spatial browsing grow significantly in the next year. He was originally going to show us a different non-public example on this topic, but he couldn't because he had a problem connecting to the Google VPN. My interpretation is that we will see some announcements from Google in the not too distant future that combine their traditional search with geospatial capabilities (of course people like MetaCarta have been doing similar things for a while, but as we have seen with Earth and Maps, if Google does it then things take on a whole new dimension).

Another item of interest that Michael mentioned is that Google is close to reaching an arrangement with the BC (British Columbia) government to publish a variety of their geospatial data via Google Earth and Maps. This was covered in an article in the Vancouver Sun, which has been referenced by various other blogs in the past couple of days (including AnyGeo, The Map Room, and All Points Blog). This could be a very significant development if other government agencies follow suit, which would make a lot of sense - it's a great way for government entities to serve their citizens, by making their data easily available through Google (or Microsoft, or whoever - this is not an exclusive arrangement with Google). There are a few other interesting things Michael mentioned which I'll save for another post.

One other theme which came up quite a lot during the conference was "traditional" geospatial data creation and update versus "user generated" data ("the crowd", "Web 2.0", etc). Several times people commented that we had attendees from two different worlds at the conference, the traditional GIS world and the "neogeography" world, and although events like this are helping to bring the two somewhat closer together, people from the two worlds tend to have differing views on topics like data update. Google's move with BC is one interesting step in bringing these together. Ron Lake also gave a good presentation with some interesting ideas on data update processes which could accommodate elements of both worlds. Important concepts here included the notions of features and observations, and of custodians, observers and subscribers. I may return to this topic in a future post.

As anticipated given the speakers, there were some good keynotes. Vint Cerf, vice president and chief Internet evangelist for Google, and widely known as a "Father of the Internet", kicked things off with an interesting presentation which talked about key architectural principles which he felt had contributed to the success of the Internet, and some thoughts on how some of these might apply to the "GeoWeb" - though as he said, he hadn't had a chance to spend too much time looking specifically at the geospatial area. I will do a separate post on that.

He was followed by Jack Dangermond, who talked about his current theme of "The Geographic Approach" - his presentation was basically a subset of the one he did at the recent ESRI user conference. He was passionate and articulate as always about all that geospatial technology can do for the world. A difference in emphasis between him and speakers from "the other world" is in the focus on the role of "GIS" and "GIS professionals". I agree that there will continue to be a lot of specialized tasks that will need to be done by "GIS professionals" - but what many of the "old guard" still don't realize, or don't want to accept, is that the great majority of useful work that is done with geospatial data will be done by people who are not geospatial professionals and do not have access to "traditional GIS" software. To extend an analogy I've used before, most useful work with numerical data is not done by mathematicians. This is not scary or bad or a knock on mathematicians (I happen to be one by the way), but it does mean that as a society we can leverage the power of numerical information by orders of magnitude more than we could if only a small elite clique of "certified mathematical professionals" were allowed to work with numbers. Substitute "geographical" or "geospatial" as appropriate in this statement to translate this to the current situation in our industry.

For example, one slide in Jack's presentation has the title "GIS servers manage geographic data". This is a true statement, but much more important is the fact that we are now in a world where ANY server can manage geographic data - formats like GeoRSS and KML enable this, together with the fact that all the major database management systems are providing support for spatial data. There is a widely stated "fact" that many people in the geospatial industry have quoted over the years, that something like 85% of data has a geospatial component (I have never seen a source for this claim though - has anyone else?). Whatever the actual number, it certainly seems reasonable to claim that "most" data has a spatial component. So does that mean that 85% of data needs to be stored in special "GIS servers"? Of course not, and that is why it is so significant that we really are crossing the threshold to where geospatial data is just another data type, which can be handled by a wide range of information systems, so we can just add that spatial component into existing data where it currently is. Jack also continues to label Google and Microsoft as "consumer" systems when, as I've said before, they are clearly much more than that already, and their role in non-consumer applications will continue to increase rapidly.
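To make the point about GeoRSS concrete, here is a minimal Python sketch (my own illustration, not something shown at the conference) of how an ordinary feed generator on any web server could attach a location to each item, using the GeoRSS-Simple `georss:point` element inside a plain RSS 2.0 feed. The record fields (`name`, `url`, `lat`, `lon`), the feed title and the sample data are all hypothetical.

```python
import xml.etree.ElementTree as ET

# GeoRSS-Simple namespace; registering it gives the output a readable "georss:" prefix
GEORSS_NS = "http://www.georss.org/georss"
ET.register_namespace("georss", GEORSS_NS)


def add_geotagged_item(channel, title, link, lat, lon):
    """Append an RSS 2.0 <item> that carries a GeoRSS-Simple <georss:point>."""
    item = ET.SubElement(channel, "item")
    ET.SubElement(item, "title").text = title
    ET.SubElement(item, "link").text = link
    # GeoRSS-Simple encodes a point as "latitude longitude", separated by a space
    ET.SubElement(item, f"{{{GEORSS_NS}}}point").text = f"{lat} {lon}"
    return item


def build_feed(records):
    """Build an RSS 2.0 document from hypothetical records with name/url/lat/lon."""
    rss = ET.Element("rss", {"version": "2.0"})
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = "Hypothetical location feed"
    for rec in records:
        add_geotagged_item(channel, rec["name"], rec["url"], rec["lat"], rec["lon"])
    return ET.tostring(rss, encoding="unicode")


if __name__ == "__main__":
    print(build_feed([{"name": "Denver office", "url": "http://example.com/denver",
                       "lat": 39.7392, "lon": -104.9903}]))
```

Nothing here needs a "GIS server": the location is just two more numbers in an existing record, serialized in a format that GeoRSS-aware clients can put on a map.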

But anyway, as Ron said in his introduction, it would be hard to get two better qualified people than Jack and Vint to talk about some of the key concepts of "geo" and "web", so it was an excellent opening session. I think that this post is more than long enough by this point, so I'll wrap it up here and save further ramblings for part 2!


Labels:

conference,

ESRI,

geospatial,

google,

maps,

Microsoft

Thursday, July 26, 2007

Microsoft Virtual Earth to support KML

I'm at the GeoWeb conference in Vancouver, which has been good so far - I will be posting more when I get time, but it's been hectic. However, I just thought I would do a quick post to say that in the Microsoft "vendor spotlight" presentation which just finished, the speaker said that Virtual Earth will support the ability to display KML in a September / October release this year. Maybe I missed something, but I hadn't seen this news elsewhere. I just did a quick Google and this post at Digital Earth Blog says that at Where 2.0 in June they wouldn't comment on support for KML, and I didn't find any other confirmation online of the statement that was made here today, which makes me wonder whether this comment was "officially blessed". Has anyone else heard this from other sources?

This would make a huge amount of sense of course, given the amount of data which is being made available in KML, but nevertheless Microsoft does have something of a track record of trying to impose their own standards :), and they have been reluctant to commit to KML up to this point, so I think this is a very welcome announcement (assuming it's correct), which can only cement KML's position as a de facto standard (I don't think Microsoft could have stopped KML's momentum, but if they had released a competing format it would have been an unfortunate distraction).


Tuesday, July 24, 2007

Sad news about Larry Engelken

On Friday I heard the very sad news that Larry Engelken had been killed in a jet-skiing accident while on vacation in Montana. He was 58. Larry was a founder of Convergent Group (originally UGC), a past president of GITA, and a great person. The Denver Post has an obituary here. My deepest condolences go out to Larry's family.

Friday, July 20, 2007

GeoWeb panel update

As I mentioned previously, I am going to be at the GeoWeb conference in Vancouver next week, and I will now be participating in the closing panel entitled "Future Shock: The GeoWeb Forecast for 2012", together with Ron Lake of Galdos, Michael Jones of Google, Carl Reed of OGC, and Vincent Tao of Microsoft. The abstract is as follows:

This closing panel session features senior visionaries who provide a synthetic take of GeoWeb 2007 and use this as a basis for forecasting the growth, evolution, and direction of the GeoWeb. Specifically, discussants will address:

What will it look like in 2012?

What device(s) will predominate?

What will be the greatest innovation?

What will be the largest impediment?

What market segments will it dominate?

What market segments will it fail to impact?

Each discussant will provide a five- to seven-minute statement touching on each of the questions above. A 30-minute question-and-answer session will follow. Answers will be limited to two minutes; each discussant has the opportunity to respond to each question.

Since I leave tomorrow morning to drive up there, I will have something to think about on the road! It should be a good panel I think.


Tuesday, July 17, 2007

Back from Mexico - some experiences with geotagging

Well hello again, I am back from Mexico and had a great time. I managed to avoid blogging; the following was a more typical activity :)

This was my first vacation where I managed to reliably geotag all my photos - previously I had made a few half-hearted attempts but had failed to charge enough batteries to keep my GPS going consistently, or hadn't taken it everywhere. I used HoudahGeo to geotag the photos on my Mac, and this seemed to work fine, with a couple of minor complaints. One is that when it writes lat-long data to the EXIF metadata in the original images, for some reason iPhoto doesn't realize this, and doesn't show the lat-long when you ask for info on the photo (unless you export and re-import all the photos, in which case it does). The other is that most operations are fast, but for some reason writing the EXIF data to the photos, the final step in the workflow I use, is very slow - it would often take several minutes to do this for several hundred 10 megapixel images (a typical day's shooting for me), when the previous operations had taken a few seconds. But it did the job and all my photos have the lat-long where they were taken safely tucked away in their EXIF metadata.
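For anyone curious how this kind of geotagging works in principle: a common approach (I'm assuming this is roughly what tools like HoudahGeo and RoboGeo do, but this is not their actual code) is to look up each photo's capture time in the GPS track log, interpolate a position between the two nearest fixes, and then write that position into the EXIF GPS tags, which store latitude and longitude as degree/minute/second rationals plus an N/S or E/W reference. The Python sketch below shows both steps. It assumes the camera clock and the track log use the same time base (in practice you usually have to correct for a camera clock offset or time zone), and it ignores tracks that cross the 180° meridian. Actually writing the bytes into the image file is left to an EXIF library.

```python
from bisect import bisect_left
from datetime import datetime
from fractions import Fraction


def position_at(track, when):
    """Linearly interpolate (lat, lon) at time `when` from a GPS track.

    `track` is a list of (datetime, lat, lon) tuples sorted by time.
    Returns None if `when` falls outside the recorded track.
    """
    times = [t for t, _, _ in track]
    i = bisect_left(times, when)
    if i < len(track) and times[i] == when:
        return track[i][1], track[i][2]  # exact match with a GPS fix
    if i == 0 or i == len(track):
        return None  # photo taken before the first or after the last fix
    (t0, lat0, lon0), (t1, lat1, lon1) = track[i - 1], track[i]
    frac = (when - t0) / (t1 - t0)  # timedelta / timedelta gives a float
    return lat0 + frac * (lat1 - lat0), lon0 + frac * (lon1 - lon0)


def to_exif_gps(value, positive_ref, negative_ref):
    """Convert decimal degrees to an EXIF-style (ref, (deg, min, sec) rationals) pair."""
    ref = positive_ref if value >= 0 else negative_ref
    # Work in whole hundredths of an arc-second so float drift can't produce 60"
    total = round(abs(value) * 360000)
    degrees, rest = divmod(total, 360000)
    minutes, centiseconds = divmod(rest, 6000)
    seconds = Fraction(centiseconds, 100)  # Fraction reduces automatically
    return ref, ((degrees, 1), (minutes, 1), (seconds.numerator, seconds.denominator))


if __name__ == "__main__":
    track = [
        (datetime(2007, 7, 10, 12, 0), 20.0, -87.0),
        (datetime(2007, 7, 10, 12, 10), 20.1, -87.2),
    ]
    fix = position_at(track, datetime(2007, 7, 10, 12, 5))
    if fix is not None:
        lat, lon = fix
        print(to_exif_gps(lat, "N", "S"), to_exif_gps(lon, "E", "W"))
```

Doing the matching is cheap (a binary search per photo); rewriting hundreds of large image files is the part that takes real time.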

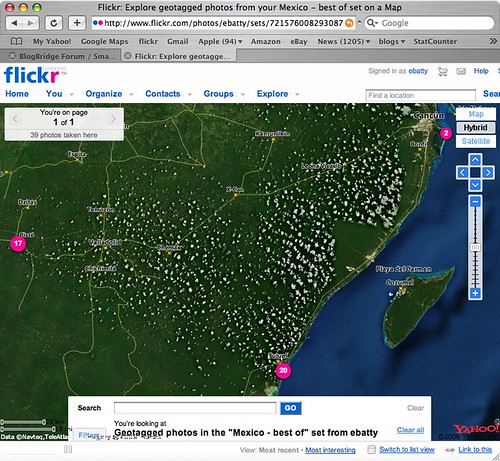

Flickr provides its own map viewing capability, which uses Yahoo! maps (not surprisingly since Yahoo! owns flickr). If you click here, you can see the live version of the map which is also shown in the following screen shot, showing the locations of my "top 40" pictures from the trip.

If you zoom in to the northeast location, Cancun, there is some high-quality imagery, which gives a good basis for seeing where the photos were taken. However, the imagery is very poor in the two main locations where we stayed, Tulum (to the south) and Chichen Itza (to the west). I looked in Google Earth and Google Maps, and they both had much better imagery in Tulum, though unfortunately not in Chichen Itza. Microsoft didn't have good imagery for either location. So I thought I should poke around a little more to explore the latest options for displaying geotagged photos from flickr in Google Earth or Google Maps.

Flickr actually has some nice native support now for generating feeds of geotagged photos in either KML or GeoRSS. So this link dynamically generates a KML file of my photos with the tags mexico and best (though neither Safari nor Firefox seems able to recognize this as a KML file automatically; I have to tell them to use Google Earth and then it works fine). Unfortunately though, flickr feeds seem to return a maximum of 20 photos, and I haven't found any way around this. I can work around it by creating separate feeds for the best photos from Tulum, Chichen Itza and Cancun, but obviously that's not a good solution in all cases. These KML files work well in Google Earth, and one nice feature is that they include thumbnail versions of each photo which are directly displayed on the map (and when you click on those, you get a larger version displayed and the option to click again and display a full size photo back at flickr). However, the approach of using thumbnails does obscure the map more than if you use pins or other symbols to show the location of the photo - either approach may be preferred depending on the situation. These files don't display especially well in Google Maps - you get the icon, but the info window doesn't include the larger image or the links back to flickr - here's one example.
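If you go the "separate feeds" route, the pieces can at least be stitched back together into one file. Below is a rough Python sketch of a hypothetical helper that takes the already-downloaded KML text of several feeds and merges their Placemarks into a single Document. It does not talk to flickr at all, it assumes every feed uses the same KML namespace, and it assumes a photo that shows up in more than one feed can be recognized by an identical name and coordinates.

```python
import copy
import xml.etree.ElementTree as ET


def _ns(tag):
    """Return the '{namespace}' part of a Clark-notation tag, or '' if none."""
    return tag[: tag.index("}") + 1] if tag.startswith("{") else ""


def merge_kml(kml_texts):
    """Merge the Placemarks from several KML documents into one KML string."""
    roots = [ET.fromstring(text) for text in kml_texts]
    ns = _ns(roots[0].tag)  # assumption: every feed uses this same namespace
    if ns:
        ET.register_namespace("", ns[1:-1])  # serialize as the default namespace
    out_root = ET.Element(f"{ns}kml")
    doc = ET.SubElement(out_root, f"{ns}Document")
    seen = set()
    for root in roots:
        for pm in root.iter(f"{ns}Placemark"):
            key = (pm.findtext(f"{ns}name"), pm.findtext(f".//{ns}coordinates"))
            if key in seen:
                continue  # same photo returned by more than one feed
            seen.add(key)
            doc.append(copy.deepcopy(pm))
    return ET.tostring(out_root, encoding="unicode")
```

You would still need to host the merged file somewhere on the web, so this only removes the "20 photos per feed" limit, not the extra steps.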

I looked around a little more and found this post at Ogle Earth which pointed me to this Yahoo Pipe which can be used to create a KML file from flickr. After a bit more messing around (you have to find things like your internal flickr id, which is non-obvious), I managed to produce this KML file which contains all of my "top 40" photos in a single file (you may need to right click the link and save the KML file, then open it in Google Earth). Of course I also needed to upload this file to somewhere accessible on the web, so all in all this involved quite a few steps. This KML file uses pins displayed on the map (with photo titles), rather than thumbnails, and again the info window displays a small version of the photo with an option to click a link back to flickr for larger versions. This KML also includes time stamps, which is interesting - if you are using Google Earth 4, you will see a "timer" bar at the top of the window when you select this layer. To see all the images, make sure you have the whole time window selected (at first this was not the case for me, so it seemed that some of the photos were missing). But if you select a smaller window, you can do an animation to show where the pictures were taken over time, which is also pretty cool.
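For anyone who would rather generate this kind of file directly, here is a minimal Python sketch of a pin-style KML where each Placemark carries a `TimeStamp`, which is what drives the time slider in Google Earth. The photo record fields (`title`, `lat`, `lon`, `url`, `taken`) are hypothetical, capture times are assumed to be timezone-aware datetimes, and the KML 2.2 namespace is an assumption (files produced by other tools, such as the Yahoo Pipe above, may use a different one). No `Style` is defined, so viewers fall back to their default pin.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

KML_NS = "http://www.opengis.net/kml/2.2"  # assumed namespace
ET.register_namespace("", KML_NS)


def kml_tag(name):
    """Qualify a KML element name with the namespace (Clark notation)."""
    return f"{{{KML_NS}}}{name}"


def build_timed_kml(photos):
    """Build a KML document with one time-stamped pin per (hypothetical) photo record."""
    kml = ET.Element(kml_tag("kml"))
    doc = ET.SubElement(kml, kml_tag("Document"))
    for p in sorted(photos, key=lambda p: p["taken"]):
        pm = ET.SubElement(doc, kml_tag("Placemark"))
        ET.SubElement(pm, kml_tag("name")).text = p["title"]
        # KML allows HTML in <description>; ElementTree entity-escapes it on output
        ET.SubElement(pm, kml_tag("description")).text = (
            f'<a href="{p["url"]}">View on flickr</a>')
        stamp = ET.SubElement(pm, kml_tag("TimeStamp"))
        # TimeStamp/when takes an XML Schema dateTime; normalize to UTC
        ET.SubElement(stamp, kml_tag("when")).text = (
            p["taken"].astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"))
        point = ET.SubElement(pm, kml_tag("Point"))
        # KML coordinate order is longitude,latitude[,altitude]
        ET.SubElement(point, kml_tag("coordinates")).text = f'{p["lon"]},{p["lat"]}'
    return ET.tostring(kml, encoding="unicode")


if __name__ == "__main__":
    print(build_timed_kml([{
        "title": "Tulum ruins", "lat": 20.2147, "lon": -87.4291,
        "url": "http://example.com/photo",
        "taken": datetime(2007, 7, 9, 10, 0, tzinfo=timezone.utc),
    }]))
```

Because every Placemark has a time, Google Earth only shows the ones inside the selected window, which is why the whole time span has to be selected before all the photos appear.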

So, in conclusion, the tools to easily geotag your photos are pretty solid now - I have used both HoudahGeo on the Mac, and previously RoboGeo on the PC, and both worked well. The software available to display geotagged photos is getting better, but there's still room for improvement - but I'm sure things will continue to move along quickly in this area. I would like to see flickr add a "KML" button to their map displays, which would be much simpler than the current process!


Labels:

flickr,

geospatial,

geotagging,

google,

gps,

yahoo

Thursday, July 5, 2007

Off on vacation for a week

I'm off to Mexico on vacation for a week from tomorrow morning, so don't expect too much blogging! We're staying in a cabana by the ocean with no electricity for four days, though they say they have a wireless network connection - not sure if that is good or bad :). If they really do then I may have to do one post from the outdoor bathtub before the iPhone batteries expire, just because I can ... but in general things will be quiet for a week or so!

However, I did just buy myself a new Garmin 60CSx handheld GPS (since I still can't get any software I'm happy with to save tracklogs on the BlackBerry), and plan to test out the HoudahGeo application I just bought for the Mac to geotag all my photos (we will be going to the Mayan pyramids at Chichen Itza too, which I'm really looking forward to). I did a quick test of HoudahGeo in Denver this afternoon and it seemed to work fine (I had previously used RoboGeo on the PC, but that doesn't work on the Mac).


Cheers for now :).

