Saturday, December 29, 2007

Location awareness for iPhone coming soon?

The report at GearLive also says that the new release of iPhone Google Maps will support the hybrid display mode (it currently just provides "Map" and "Satellite" displays), and the new Options screen that they show also adds a new "Drop pin" function, presumably for visually marking a location on the map.

So one way or another, it looks as though the location determination options for us iPhone owners will hopefully be improving soon!

Tuesday, December 18, 2007

Where the fugawi?

Sunday, December 9, 2007

Keynote address at FME Worldwide User Conference (post number 100!)

I have known the co-founders of Safe, Don Murray and Dale Lutz (pictured here in the Safe Insider Newsletter), since the very early days of Smallworld. They founded the company in 1993, which was the same year that we began in North America with Smallworld. We had an early mutual customer in electric utility BC Hydro, and I remember being introduced to them when Safe was still relatively unknown, by Marv Everett, who ran the transmission system GIS at BC Hydro back then (who incidentally is now retired and living in Campbell River on Vancouver Island, and enjoying spending time on his boat - see the picture below).

The BC government provided data back then in a format called SAIF, which BC Hydro needed to use, and Safe Software were the experts in this (which is where the company name came from). Marv was a big advocate of Safe, and they became a key business partner for Smallworld across our whole customer base after that initial BC Hydro project. And of course Safe works with all the major GIS vendors, so that partnership continued through my time at Intergraph.

Anyway, enough reminiscing ... I am very pleased to see how successful Don and Dale have been with Safe since then, and look forward to attending the FME conference, and spending some time in Vancouver which is one of my favorite cities.

Oh, I almost forgot (this ended up not being such a short post after all) - this is my 100th post since I began blogging on April 20th this year. It's been fun so far!

Friday, December 7, 2007

When navigation systems are superfluous

Neo-pointless question of the moment: is this thing "GIS"?

If you work in an organization that uses location data, or you are developing applications in this area, you just need to look at the tools which can help you work with that data and choose the best tools for the job, based on various criteria including functionality, cost, risk, etc. You shouldn't care what labels people may or may not attach to the various systems. There continues to be very rich functionality in the established systems which has been developed over decades, and the new generation of systems is not going to replace all of that any time soon. But probably 95% of the users of geospatial data use 5% of that functionality, and they are all very strong candidates for leveraging the newer generation of systems. If you work for an established "GIS" provider, you need to be working out how to leverage the new generation of systems in your solutions (be they from Google or Microsoft or open source or wherever), and figuring out how you continue to add value in this expanded ecosystem. Trying to fight the new systems head on, or dismiss them as not relevant to what you do, will not be a successful approach. But it is not a case of either "GIS" or "neogeography" (whatever both those things are), it is a case of mixing and matching the pieces that are important for your particular application. And as I said previously, I believe that the proportion of pieces which come from the "new generation" of systems (including open source) will increase rapidly.

Tuesday, December 4, 2007

No data creation in neogeography - errr????

King Canute trying to turn back the tide

Monday, December 3, 2007

Oxford University using OpenStreetMap data

Here's a screen shot from the Open Street Map version (for live version click here and zoom in):

Note all the footpaths and alleyways, of which Oxford has a lot. On the west side it includes a footpath along the canal and on the east side it shows an all important very narrow alleyway off New College Lane which leads to a great old pub called the Turf Tavern, which is easily overlooked. It also correctly shows that Broad Street is no longer a through street, which is a relatively recent change (some time in the last few years, not sure exactly when). None of these details are shown on the following Google map (for live version click here):

OpenStreetMap is a free editable map of the whole world. It is made by people like you.

OpenStreetMap allows you to view, edit and use geographical data in a collaborative way from anywhere on Earth.

So it's essentially a "crowdsourcing" approach to geospatial data collection. There was a lot of interest in OpenStreetMap at the FOSS4G conference this year. I had been impressed with everything I had seen about the project, but I have to confess that I had been thinking of it mainly as a "cheap and cheerful" (cheap=free) alternative to other more expensive but higher quality data sources. It is interesting to see that it has already moved past that in some locations (though obviously not all) to where it is more comprehensive and more up to date than data from commercial sources - this is just a taste of things to come in this regard, I am sure.

Wednesday, November 21, 2007

Buy your "geo gifts" here!

Tuesday, November 20, 2007

First impressions: quick review of the Amazon Kindle

I read a lot online. I almost never buy paper newspapers and rarely watch TV - I get most of my news online. I read a lot of blogs. Both of these activities - reading news and blogs - tend to be non-sequential. You skim over headlines, read the stories that interest you, and skip those that don't. When online (as opposed to reading paper newspapers), there are often links in one story that lead you to another. Standard web browsers are a good environment for this - you can fit plenty of information on the screen, it's fast and efficient to move your mouse to select links, the pages can include color and graphics. The Kindle can let you read news and blogs, but it's clunky for this. I could potentially see myself using it occasionally for this if I was traveling and didn't have other options, though even then I would probably prefer the browser on my iPhone in most circumstances - it does have color and graphics, and I can more quickly select links on the touch screen. But in general I would use my laptop whenever possible for these things. The same is true of magazines on the Kindle, only more so - I think that here you lose more by not having color graphics. In general I read more offline magazines than newspapers, as I think it's harder to recreate the full magazine experience online.

For reading my own work or personal documents, again in most cases I think that the Kindle will not be the right choice. In most cases when I am reading a document like this, I may want to take notes, make edits, email things to other people. Again my laptop is really the right tool for this job. If I have some documents that I may just want to refer back to then it's nice that I can download those to the Kindle, but I don't see that being a primary use. On that note, I saw before I bought it that it costs ten cents to convert a document and deliver it wirelessly to your Kindle - I found out after buying it that there is an option to convert it for free and download it to your computer, then transfer it via a USB connection. Though to be honest unless I was really transferring lots of documents, I'd probably pay the ten cents most of the time to save the hassle of doing the manual transfer (not a huge deal, but you will spend several minutes to do that).

So anyway, on to the books, the real reason for having this. Despite all my other online activities, I have never read a whole book online. I have had Safari subscriptions for technical books, where you jump in and out, search for specific items, and read sections at a time, but you typically don't sit there and read the thing from end to end. With the Kindle I think I will be quite happy to read books from beginning to end. The "electronic paper" display really is very easy on the eyes. And all you have to do to flip to the next page is press on the large bar which runs all the way down the right hand side of the Kindle (there's a smaller one on the left too), which you can do without thinking at all. This really just lets you get immersed in the reading more than in most online environments where you might have to use the mouse or even just the page down button to scroll to a new screen or page.

The form factor is nice - it's very light and compact, but the screen is large enough to read comfortably. The design could be slicker, but I like it better in person than in the pictures. It comes with a nice leather book-like folding case. And when you're reading on it you really are just focused on the screen - Jeff Bezos talks about making the technology "disappear" so you can just read, and I think they have been successful in doing that.

And I like the browsing and buying experience, which you can do either on the Kindle, or on Amazon in your regular browser. You can download the first chapter of a book (on everything I've looked at so far, at least) which is nice for sampling before you commit to buying. Prices relative to hard copy books vary significantly. For technical books there is no difference in most cases (which is a shame!). For those I think that O'Reilly's Safari continues to be a good option. Current best-sellers and new books are mainly priced at $10, which is often $10-20 less than the corresponding paper book. And there seem to be some good deals on "classics" - I have bought 1984 for $3.75, versus the cheapest paper version on Amazon being $10, and Tess of the d'Urbervilles for $2.39, both of which had been on my list of things to re-read sometime. So while the initial cost of $400 is pretty high and will clearly need to come down quite a bit before any widespread adoption, the net cost if you read tens of books a year is actually a lot less.

I think that the form factor is really good for travel. I like to travel light, and if I throw 3 or 4 books in my bag I really feel the difference. The Kindle is very lightweight (lighter than a single paperback book), so I really like that aspect.

In conclusion, I think it's highly likely that having the Kindle will result in me reading more books than I did before, especially when I'm traveling (though I'm not doing that much these days). But I think that a combination of the ease of the buying process, the good prices (in many cases), and the convenience (and novelty factor, at least initially) of the device itself will encourage me to read more. But only time will tell whether this really will be the case or not ... I'll report back in a little while!

Monday, November 19, 2007

Amazon Kindle electronic book

Sunday, November 18, 2007

Startup diary - 7 weeks in

Friday, November 2, 2007

Never a dull moment in the Social Networking space

And then yesterday, there was a major announcement from Google about a new open platform called Open Social, which is as the name suggests a new open approach to social networking. As Techcrunch puts it, "within just the last couple of days, the entire social networking world has announced that they are ganging up to take on Facebook, and Google is their Quarterback in the big game". The impressive list of partners involved includes LinkedIn, MySpace, Plaxo and - interestingly - salesforce.com and Oracle, who are (presumably) looking to apply some of the principles of social networking in a business environment. We had been thinking about potential applications for our system with salesforce.com before this announcement.

So this all seems like good news for us, in terms of enabling us to integrate the location related functionality we're developing (focused on Facebook initially) with multiple systems using one standard approach.

Wednesday, October 24, 2007

Startup diary - 3.5 weeks in

I have founded one startup before, Ten Sails (now Ubisense), but this one feels very different. At Ten Sails we had four co-founders - myself, Richard Green, Martin Cartwright and David Theriault. Richard and David were both founders of Smallworld before that, so they had been through it before. Richard was the CEO and, while we all had input, he was really driving the company direction. And Martin is an experienced CFO and took care of all the finances, as well as contractual and legal issues - all the sort of things I was happy to ignore as CTO! But this time, according to the official formation documents, I am company President, Chief Executive Officer, Treasurer and Secretary, so there's no hiding from any of that stuff!

Another big difference is that this time we have a very clear idea for a product and have launched immediately into product development, which wasn't the case with Ten Sails, where we spent some time exploring various ideas after we had formed the company. We're in a space, social networking (and location), where there is a huge amount of activity going on, and so far we haven't seen anyone else doing what we plan to do - but that puts a real feeling of urgency into getting our product out as soon as possible, before someone else gets there.

Marc Andreessen says:

First, and most importantly, realize that a startup puts you on an emotional rollercoaster unlike anything you have ever experienced.

You will flip rapidly from a day in which you are euphorically convinced you are going to own the world, to a day in which doom seems only weeks away and you feel completely ruined, and back again.

Over and over and over.

And I'm talking about what happens to stable entrepreneurs.

I'd put myself in the pretty stable category, but there's definitely a strong element of this. I feel very confident that we're going to build a compelling application and have a great chance of getting millions of users ... but then I get rather nervous whenever I'm listening to a conference presentation or reading a blog about interesting new location-related startups, in case I come across someone doing the same thing ... but that hasn't happened so far :).

As I have mentioned in passing, I have two contract developers working for me, who are both doing a great job, Glen Marchesani and Nate Irwin. The first week we spent working around the dining table in my loft (not quite the traditional garage, but I really don't have a garage, just a spot in an underground parking lot which wouldn't work so well :) )...

... but now we've graduated to a cool and funky little office space inside Denver Union Station, which has a good startup feel to it ...

It's about a 100 yard walk from the door of my loft building to the door of Union Station, so that's a little more convenient than the 8 hour, 2 flight, 1000+ mile trip I used to have to get to my Intergraph office in Huntsville.

On the technical front, we have spent a little more time on installation and setup of our environments than I had hoped, but nevertheless we've made good progress on our design and initial development work. We had initially been looking at using Ruby on Rails, but ended up deciding to use Java on the middle tier. The biggest single factor in the decision was Glen's extensive experience and skills with Java, but there were a number of minor technical considerations too. We're still planning to use PostGIS as our back end database, though we're also looking at building a custom file-based caching mechanism for certain aspects of the application. We're currently using Media Temple as our hosting provider, but are thinking seriously about using Amazon EC2 and S3 when we roll out, especially now that Amazon has added new "extra large" servers with 15 GB of memory, 8 "EC2 Compute Units" (4 virtual cores with 2 EC2 Compute Units each), and 1690 GB of instance storage, based on a 64-bit platform - these servers should work well for serious database processing. These cost 80c per hour, excluding network traffic and external storage. A really attractive aspect of this "utility" approach to computing is that we can fire up a number of very high end machines like this to do high volume performance testing up front, but just pay for the time we are using them, and then scale back to a smaller configuration (with correspondingly lower cost) in the knowledge that we can scale up as necessary as we grow.
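To put some rough numbers on the "pay only for what you use" argument, here is a minimal sketch in Python. It uses only the 80c per hour figure quoted above, and like that figure it excludes network traffic and external storage. The function name and the example instance counts are my own illustrative assumptions, not anything from Amazon's tooling.

```python
# Hourly price of one "extra large" instance, in cents, as quoted above.
EXTRA_LARGE_CENTS_PER_HOUR = 80


def burst_cost_usd(instances: int, hours: int,
                   cents_per_hour: int = EXTRA_LARGE_CENTS_PER_HOUR) -> float:
    """Compute-only cost in dollars of running `instances` machines for `hours`.

    Integer cents are used internally to avoid floating point rounding noise.
    Network traffic and external storage are NOT included.
    """
    return instances * hours * cents_per_hour / 100


# Hypothetical scenario: a 6 hour load test on 10 machines.
print(burst_cost_usd(10, 6))

# Hypothetical scenario: one machine left running for a 30 day month.
print(burst_cost_usd(1, 24 * 30))
```

So a fairly heavyweight afternoon of performance testing costs about the same as a nice dinner, which is a very different proposition from buying ten servers up front.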

We will also be leveraging the Facebook Platform heavily in our first release, and I'll talk more about that in a future post. One of the exciting - and slightly scary - things about this is that there are several examples of Facebook applications growing to a million plus users within a month of launching, which is hugely faster growth than you would expect with traditional old school Internet applications back in 2006 :). And our back end processing needs are much heavier than most Facebook applications, so we have to put some serious effort into scalability. Obviously there's no guarantee we will grow anywhere near that fast, but we have to plan for that eventuality.

Between the ability of Amazon to allow us to scale up hardware on demand, and the ability of Facebook to deliver huge numbers of users and provide a good amount of core application infrastructure to us, I think it's pretty interesting that we can be seriously contemplating the possibility that we could have millions of users running a very sophisticated application within six months or so, and yet still have only a handful of people in the company. Or we might not of course, that's the emotional roller coaster part :).

Tuesday, October 16, 2007

Microsoft Virtual Earth now supports KML

Sunday, October 14, 2007

Name a country which begins with U

Nice little feature on iPhone Google Maps

Friday, October 5, 2007

Google Maps changes geocoding limits

Tuesday, October 2, 2007

Intriguing approach to image resizing

Someone else has produced an online demo based on the idea, and the paper mentioned is available at the site of co-author Ariel Shamir.

Monday, October 1, 2007

Spatial Networking is under way

Wednesday, September 26, 2007

Review of FOSS4G

One specific aim for me in coming here was to learn more about PostGIS, which I regarded beforehand as the front runner for the database technology to use for my new company Spatial Networking. Paul Ramsey, who was a busy man this week, giving a number of very good presentations, presented an interesting set of PostGIS case studies. These included IGN, the French national mapping agency, who maintain a database of 100 million geographic features, with frequent updates from 1800 users, and a fleet management company which stores GPS readings from 100 vehicles 10 times a minute for 8 hours a day, or 480,000 records a day. In a separate presentation, Alejandro Chumaceiro of SIGIS from Venezuela, talked about a similar fleet management application with very high update volumes. Interestingly, they use partitioned tables and create a new partition every hour! Incidentally, I talked with Alejandro afterwards and it turns out that he worked for IBM on their GFIS product from 1986 to 1991, and knew me from those days - it's a small world in the geospatial community :). Kevin Neufeld from Refractions Research also gave a lot of useful hints about partitioning and other performance related topics. Brian Timoney talked about the work he has done using both Google Earth and Virtual Earth on the front end, with PostGIS on the back end doing a variety of spatial queries and reports, including capabilities like buffering, in a way which is very easy to use for people with no specialized knowledge. And Tyler Erickson of Michigan Tech Research Institute talked about some interesting spatio-temporal analysis of environmental data he is doing using PostGIS, GeoServer and Google Earth. Overall I was very impressed with the capabilities and scalability of PostGIS, and was reassured that this is the right approach for us to use at Spatial Networking.
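The fleet management numbers above are easy to check, and the hourly partitioning idea is simple to sketch. The following is a minimal illustration in Python, not the actual SIGIS or PostGIS implementation - the function names and the `gps_readings_` table prefix are hypothetical, and a real system would create the partitions in the database itself.

```python
from datetime import datetime


def readings_per_day(vehicles: int, per_minute: int, hours_per_day: int) -> int:
    """Rows generated per day by a fleet reporting at a fixed rate."""
    return vehicles * per_minute * 60 * hours_per_day


def hourly_partition_name(ts: datetime, prefix: str = "gps_readings_") -> str:
    """Name of the (hypothetical) hourly partition a reading would be routed to."""
    return prefix + ts.strftime("%Y%m%d_%H")


# 100 vehicles, 10 readings a minute, 8 hours a day.
print(readings_per_day(100, 10, 8))  # 480000

# A reading taken at 14:37 goes into the 14:00 partition for that day.
print(hourly_partition_name(datetime(2007, 9, 26, 14, 37)))  # gps_readings_20070926_14
```

The attraction of the hourly approach is that each partition stays small, so inserts always hit a compact table and old data can be archived or dropped a partition at a time.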

Another topic which featured in several sessions I attended was that of data. As Schuyler Erle said in introducing a session about the OSGeo Public Geodata Committee, a key difference between geospatial software and other open source initiatives is that the software is no use without data, so looking at ways to create and maintain, share, and enable users to find, freely available geodata is also an important element of OSGeo's work, in addition to software. Nick Black, a fellow Brit, gave a good talk about Open Street Map, which is getting a lot of interest. The scope of what they are doing is broader than I had realized, including not just streets but points of interest (pubs are apparently especially well represented!), address information which can be used for geocoding, and they are working on building up a US database based on TIGER data. The ubiquitous Geoff Zeiss, a man without whom no GIS conference would be complete :), gave an interesting review of the wide variety of government policies with regard to geospatial data around the world. One curious snippet from that was that in Malaysia and some other Asian countries, you need to have an application reviewed by the police and the army before being able to receive a government-issued map! In the opening session, I enjoyed the talk by Andrew Turner of Mapufacture on Neogeography Data Collection, which was a great overview of the wide range of approaches being used for "community generated" data, including things like cheap aerial photography using remote control drones from Pict'Earth - they have a nice example of data captured at Burning Man. This was one of a number of five minute lightning talks, which went over pretty well - several people told me that they enjoyed the format. I also gave one of those, on the topic of the past, present and future of the geospatial industry, and managed to fit into the allotted time - though next time I might choose a slightly more focused topic :) ! 
I will write up my talk in a separate post at some point (it will take a lot longer than 5 minutes to do that though!). Ed McNierny of Topozone had the most intriguing title, "No one wants 3/8 inch drill bits" - the punchline was that what they actually want is 3/8 inch holes, and we should focus on the results that our users need, not the specific tools they use to achieve those. Schuyler Erle gave one of the more entertaining presentations on 7-dimensional matrices that I have seen (and I say that as a mathematician).

Also in the opening session, Damian Conway gave a good talk entitled "geek eye for the suit guy", on how to sell "suits" on the benefits of open source software. Roughly half his arguments applied to geospatial software, and half were more specific to Linux - Adena has done a more detailed writeup.

Brady Forrest of O'Reilly Media gave an interesting presentation on "Trends of the Geo Web". His three main themes were "Maps, Maps Everywhere", "The Web as the GeoIndex", and "Crowdsourced Data Collection". One interesting site he mentioned that I hadn't come across before was Walk Score, which ranks your home based on how "walkable" the neighborhood is (my loft in downtown Denver rated an excellent 94 out of 100). It seems as though every time I see a presentation like this I discover some interesting new sites, and now I listen slightly nervously hoping that I don't discover someone doing what we plan to do with Spatial Networking, but so far that hasn't happened!

I also was on the closing panel for the conference, which I thought went well - we had a pretty lively discussion. The closing session also included a preview of next year's conference which will be in Cape Town, South Africa. I had the pleasure of spending a few days in Cape Town in 2002, followed by a safari in Botswana which still ranks as the best of the many trips I've done to different parts of the world (check out my pictures). So I certainly hope to make it to the conference, and highly recommend that others try to make it down there and spend some additional time in that part of the world too.

Apologies to those I missed out of this somewhat rambling account, but the Sticky Wicket pub is calling, so I will wrap it up here, for now at least.

Monday, September 24, 2007

Oh the irony

And then I hear from Paul Ramsey on Friday and he says "we need your presentation in Open Office or PowerPoint format as we'll be presenting them all from the same PC". PowerPoint? PC? Aargghhh!!! Of all the places to force me to convert my beautiful Mac presentation back into a boring old PowerPoint to run on a PC, I didn't think it would be at an open source conference ;) !! Oh well, I'll have to save the launch of my Keynote pyrotechnics for another occasion :). To be fair, Paul has had a huge amount of work organizing all sorts of things for the conference so I can quite understand him wanting to simplify the presentation logistics ... but I figured I still needed to give him a bit of a hard time!

Anyway, it will force me to focus on content versus style. I am talking about the "past, present and future of the geospatial industry" in five minutes, so it will be interesting to see if I get through it all before I'm thrown off :) !!

Friday, September 21, 2007

Ignacio Guerrero retires from Intergraph

Tuesday, September 18, 2007

Finally, the big decision on my next career move!

- No, don't do it! - 231 votes (49%)

- Yes, it is your destiny! - 177 votes (37%)

- Don't know / don't care / who is Peter Batty? - 66 votes (14%)

But after all that I have decided that I am going to go ahead and do my own startup, which I mentioned as a possibility in an earlier post looking for developers. I'm pleased to say that I got a number of responses to that post and have a couple of really good people signed up to start with, and a few others who might come on board as things move along. The new company is called Spatial Networking, and we will be focused on location related applications in the social networking space. I don't want to say too much more than that right now, but we will be looking to get our initial offering out in a few months, and I'll talk more about what we're up to as things move along. I'm excited about the opportunity in the space we're looking at, it should be a fun ride!

Incidentally, a couple of people asked me if I was looking for investors, and I will be at some point fairly soon - if you have any interest in finding out more when I get to that stage, feel free to email me.

Monday, September 17, 2007

GIS in the Rockies panel on Service Oriented Architecture (SOA)

The six panelists were asked to spend five minutes each answering the following question: Do you agree that service oriented architecture (SOA) is the key to enterprise data integration and interoperability? If so, how do you see geospatial technology evolving to support the concept of location services and data interoperability? If not, what is the alternative?

My short answer to the question was that SOA is one of various elements which can play a role in implementing integrated enterprise systems, but it is not THE key, just one of various aspects of solving the problem. The preceding panelists had given quite varied responses about different aspects of interoperability and SOA, which was perhaps indicative of the fact that SOA is a bit of a fuzzy notion with no universally agreed upon definition. The one constant in the responses was that the 5 minute time limit was not well adhered to :) !!

So in my response, I first tried to clarify what SOA was from my point of view (based on various inputs), and then talked about other aspects of enterprise integration and interoperability.

SOA is really nothing radically new, just an evolution of notions of distributed computing and encapsulating functionality that have been around for a long time. In fact, a reader survey of Network Computing magazine ranked SOA as the most-despised tech buzzword of 2007! SOA is an approach to creating distributed computing systems, combining functionality from multiple different systems into something which appears to be one coherent application to a user.

CORBA and DCOM were both earlier attempts at distributed computing. The so-called "EAI" (Enterprise Application Integration) vendors like Tibco (which has existed since 1985 as part of Teknekron, and independently since 1997) and Vitria (since 1994) pioneered many of the important ideas long before SOA became the buzzword du jour. More recently the big IT vendors have been getting involved in the space, like Oracle with their Fusion middleware and Microsoft with BizTalk.

In general, most commentators seem to regard SOA as having two distinguishing characteristics from other distributed computing architectures:

- Services are fairly large chunks of functionality - not very granular

- Typically there is a common “orchestration” layer which lets you specify how services connect to each other (often without requiring programming)

Anyway, SOA certainly is a logical approach for various aspects of data integration with geospatial as with other kinds of data.

Now let's talk about some other issues apart from SOA which are important in enterprise integration and interoperability ...

Integration of front end user functionality

SOA is really focused on data integration (as the question said), but this is only a part of enterprise integration. Integration or embedding of functionality is important also, especially when you look at geospatial functionality - map display with common operations like panning, zooming, query, etc is a good example. There are various approaches to doing this, especially using browser-based applications, but still you need some consistency in client architecture approach across the organization, including integration with many legacy applications.

Standards

One key for really getting full benefits from SOA is standards. One of the key selling points is that you can replace components of your enterprise system without having to rewrite many custom interfaces - for example, you buy a new work management system and just rewrite one connector to the SOA, rather than 20 point to point interfaces. But this argument is even more compelling if there are standard interfaces which are implemented by multiple vendors in a given application space, so that custom integration work is minimized.
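The connector argument is really just counting. Here is a rough sketch in Python, under the worst case assumption that in a point to point world every system has a custom interface to every other one (real enterprises are rarely fully meshed, so treat this as an upper bound). The function names are mine, purely for illustration.

```python
def point_to_point_interfaces(systems: int) -> int:
    """Worst case: one custom interface for every pair of systems."""
    return systems * (systems - 1) // 2


def shared_layer_connectors(systems: int) -> int:
    """With a common integration layer, each system needs one connector."""
    return systems


def rewrites_when_replacing_one_system(peers: int, shared_layer: bool) -> int:
    """Interfaces to rewrite when a system that talks to `peers` others is swapped out."""
    return 1 if shared_layer else peers


# A new work management system that talks to 20 other systems.
print(rewrites_when_replacing_one_system(20, shared_layer=False))  # 20
print(rewrites_when_replacing_one_system(20, shared_layer=True))   # 1

# Total interfaces to maintain across all 21 systems.
print(point_to_point_interfaces(21))  # 210
print(shared_layer_connectors(21))    # 21
```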

On the geospatial side, we have OGC standards and more recently geoRSS and KML, and there are efforts going on to harmonize these. The more “lightweight” standards have some strong momentum through the growth in non-traditional applications.

But in many ways the bigger question for enterprise integration is not about geospatial standards, it’s about standards for integrating major business systems, for example in a utility you have CIS (Customer Information System), work management, asset management, outage management, etc.

There have been various efforts to standardize these types of workflows in electric utilities - including MultiSpeak for small utilities (co-ops), and the IEC TC57 Working Group 14 for larger utilities. MultiSpeak is probably further along in terms of adoption.

Database level integration

Database level integration is somewhat over-rated in certain respects, especially for doing updates - with any sort of application involving complex business logic, typically you need to do updates via some sort of API or service. But having data in a common database is useful in certain regards, especially for reporting. This could be reporting against operational data, or in a data warehouse / data mart.

Scalability

The SOA approach is largely (but not entirely) focused on a three-tier architecture with a thin client.

Still, for certain applications, especially heavy-duty data creation and editing, a thick client approach is preferable for performance and scalability.

Separation of geospatial data into a distinct “GIS” or “GIS database”

Enterprise systems involving geospatial data have typically had additional barriers to overcome because of technological limitations which required the geospatial data to be stored in its own separate "GIS database". Increasingly, though, these artificial barriers shouldn’t be necessary. All the major database management systems now support geospatial data types, and there are increasingly easy and lightweight mechanisms for publishing that data (GeoRSS and KML) ... so why shouldn’t location of customers be stored in the CIS, and location of assets be stored in the asset database, rather than implementing complex ways of linking objects in these systems back to locations stored in the “GIS” database? This is not just a technology question; we also need a change in mentality here - to the view that spatial is not special, just another kind of data, a theme I have talked about before (and will talk about again!).
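To make the idea of keeping location in the business system itself a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `customer` table, its columns and the sample rows are made up, and plain `lat` / `lon` columns in an in-memory SQLite database stand in for the native spatial types that the major database systems provide.

```python
import sqlite3

def customers_in_box(conn, min_lat, max_lat, min_lon, max_lon):
    """Return names of customers whose stored location falls inside a bounding box."""
    rows = conn.execute(
        "SELECT name FROM customer "
        "WHERE lat BETWEEN ? AND ? AND lon BETWEEN ? AND ? "
        "ORDER BY name",
        (min_lat, max_lat, min_lon, max_lon),
    )
    return [name for (name,) in rows]

# Hypothetical CIS table: the location lives alongside the other customer attributes,
# rather than in a separate "GIS database" linked back by an identifier.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, lat REAL, lon REAL)"
)
conn.executemany(
    "INSERT INTO customer (name, lat, lon) VALUES (?, ?, ?)",
    [
        ("Customer A", 39.75, -104.99),   # made-up point near Denver
        ("Customer B", 49.28, -123.12),   # made-up point near Vancouver
    ],
)

# Ordinary SQL answers a simple spatial question against the business table.
print(customers_in_box(conn, 39.0, 40.0, -106.0, -104.0))
```

A real deployment would more likely use the database's own geometry type and spatial index, but the point is the same: the query runs against the business table, with no linkage to a separate store.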

Summary

SOA is a good thing and helps with many aspects of enterprise integration, but it’s not a silver bullet - many other things are important in this area, including the following:

- Standards are key, covering whole business processes, not just geospatial aspects

- As geospatial data increasingly becomes just another data type, this will be a significant help in removing technological integration barriers which existed before - this really changes how we think about integration issues

- Integration of front end user functionality is important for geospatial, as well as data / back end service integration (the latter being where SOA is focused)

- There are still certain applications for which a “thick client” makes sense

Thursday, August 30, 2007

Mapping in the Arts event in Durango, Colorado

• maps inspire the artistic process

• mapping is used as a metaphor for discovery

• maps shaped the history of the West

• maps change the way we view the world

• mapping is a tool for envisioning a sustainable society

Wednesday, August 29, 2007

Insights from science fiction authors, good and bad

"... he has stopped looking forwards. 'The future is already here,' he is fond of suggesting. 'It is just not evenly distributed.' ... The problem, he suggests, is one of time and place: things, technologies, now happen too fast and in unpredictable locations."

A few things he said in the article which especially struck me as interesting were as follows:

- "These days, 'now' is wherever the new new thing is taking shape, and here is where you are logged on"

- "I'm really conscious, when I'm writing now, how Google-able the world is. You can no longer make up what some street in Moscow looks like because all your readers can have a look at it if they want to. That is an odd feeling."

- "I think we are getting to the point that a strange kind of relationship would be one where there was no virtual element. We are at that tipping point: how can you be friends with someone who is not online?"

By way of contrast to the thoughtful insights from William Gibson, a little while back someone forwarded me a link to this Google lecture by Bruce Sterling, which has to be perhaps the worst and almost certainly the most irritating presentation I have ever seen. I have a half-theory that the whole thing might be a spoof by the people who write "The Office" - it has the same cringe-worthy feel about it. Basically up front he says that he won't really talk about his ideas (about "spimes") since they're so widely published and he's sure everyone knows about them (though explaining them was supposed to be the subject of his talk). Instead he mainly talks about how visionary he is and repeatedly plugs his books. My favorite bit is right at the very end in the Q&A (yes, I did last that long, thinking that surely he must say something insightful at some point), where he asks whether anyone has read his book, and one person puts their hand up. Hint to presenters: if you're not going to talk about your ideas because you assume everyone knows about them, it may be a good idea to ask this question at the beginning of your presentation rather than the end! Perhaps Bruce has written some interesting things (he wrote an article in Wired recently which was okay), but I'm not inclined to rush out and see him speak on the basis of this showing.

Tuesday, August 28, 2007

Upcoming gigs

Peter Batty, Former Chief Technology Officer of Intergraph Corporation

Joseph K. Berry, Keck Scholar in Geosciences, University of Denver and Principal, Berry & Associates

Jack Dangermond, President, Environmental Systems Research Institute (ESRI)

William Gail, PhD, Director, Strategic Development - Microsoft Virtual Earth

Geoff Zeiss, Director of Technology, Infrastructure Solutions Division, Autodesk, Inc.

Andy Zetlan, National Director of Utility Industry Solutions, Oracle Corporation

GIS in the Rockies has always been one of the strongest regional GIS conferences, and I think they are anticipating 650+ people. There's a "social mixer" right after the panel, which hopefully most of the panelists will attend, at the Wynkoop Brewing Company in Denver, which I happen to live above, so I will definitely be there!

The second event is FOSS4G, in Victoria, Canada, where I'm giving a 5 minute lightning talk in the open session on "The past, present and future of the geospatial industry" - I felt like a bit of a challenge :) !! I'm looking forward to that actually, having never done a 5 minute presentation before - it will be interesting to figure out what to say. And I'm also going to be on the closing panel, with Tim Bowden of Mapforge Geospatial, Mark Sondheim of BC Integrated Land Management Bureau, and Frank Warmerdam, President of the Open Source Geospatial Foundation (OSGeo). It will be my first FOSS4G and I'm looking forward to meeting a different crowd and getting some new perspectives on things.

Monday, August 27, 2007

More on "is geospatial special"?

I thought I would just do a short follow up on my earlier post on GeoWeb, which generated a few comments and emails. "Mentaer" made the following comment:

I see almost once a month why geo-data are special and deserve some special treatment - even if I talk to IT people. I am thinking for instance on geographic projections, geo-ontologies (i.e. fuzzy terms and objects), and spatial indices for databases... ending up with spatial web searches such as "lakes near Zurich". So... "geo" is special from my point of view, at least as much as medical databases and images? ;) (otherwise a whole bunch of GIScientists makes unnecessary research?)

I thought I would pick up on this one. Of course there are specialized areas of work with regard to geospatial data, just as there are with numbers, but the point is that 99% of people who can do useful work with geospatial data don't need to worry about these things. I thought that the statement that geospatial data was at least as special as images was a good parallel actually. You can do extremely complex work with images, of course. But you also find images on almost every web page you access, everyone reading this blog has devices for creating image data (aka cameras), many people have a phone which can create image data, many of you will share this image data that you create with your friends using online services. So I see a lot of parallels here with geospatial data - images are a little further ahead in terms of being completely pervasive, but not by much.

Tuesday, August 7, 2007

Looking for some top notch web developers

So I'm looking around for a couple of top notch web developers who might be interested in getting involved in this. Preferably looking for people in the Denver area, but working remotely might be an option for the right person. Desirable experience / skills include the following (I'm not expecting all of these in one person):

- Ruby on Rails or Python (platform still TBD but these are probably the front runners)

- Building large scalable database applications

- Building large scalable web applications - experience with Amazon S3 and EC2 would be a plus

- AJAX

- Great user interface design and development

- PostgreSQL and/or PostGIS (though again, platform still TBD)

- Development of social networking applications (including the Facebook Platform)

- Experience with online mapping development (Google, Microsoft, etc) a plus but less important than other things on the list

If you have any interest, please get in touch! And again, there is the caveat that this may or may not happen - but finding the right people would be a big vote in favor of going this way. I think this is an opportunity to develop something very cool, have a lot of fun and make some good money (down the line)!

Apple iWeb to support Google Maps

The main subject of the Apple announcements was some new iMacs - which I was quite pleased about, as I've decided to get an iMac to manage my photos, having been impressed with both Aperture and iPhoto on my MacBook, and I'd heard rumors that they were about to launch a new line so held off. Will try out the Google Maps and iWeb stuff when I get one (they're supposed to be available immediately but are not on the Apple web site at the time of writing this).

Tuesday, July 31, 2007

MapJack

One of the most interesting aspects of this for me is in the "About MapJack" page, where they say:

Mapjack.com showcases a new level of mapping technology. What others have done with NASA budgets and Star Wars-like equipment, we've done on a shoestring budget, along with a few trips to Radio Shack. Specifically, we developed an array of proprietary electronics, hardware and software tools that enable us to capture an entire city’s streets with relative ease and excellent image quality. We have a complete low-cost scalable system encompassing the entire work-flow process needed for Immersive Street-Side Imagery, from picture gathering to post-processing to assembling on a Website.

This is just another example of people finding ways to bring down the cost of relatively specialized and expensive data capture tasks - it made me think of this post on aerial photography by Ed Parsons.

GeoWeb report - part 1

But anyway, the GeoWeb conference was very good. The venue was excellent, especially the room where the plenary sessions were held, which was "in the round", with microphones, power and network connections for all attendees (it felt a bit like being at the United Nations). This was very good for encouraging audience interaction, even with a fairly large group. See the picture below of the closing panel featuring Michael Jones of Google, Vincent Tao of Microsoft, Ron Lake of Galdos, and me (of no fixed abode).

I will do a couple more posts as I work through my notes, but here are a few of the highlights. In his introductory comments, Ron Lake said that in past years the focus of the conference had primarily been on what the web could bring to "geo", but that now we were also seeing increasing discussion on what "geo" can bring to the web - I thought that this was a good and succinct observation.

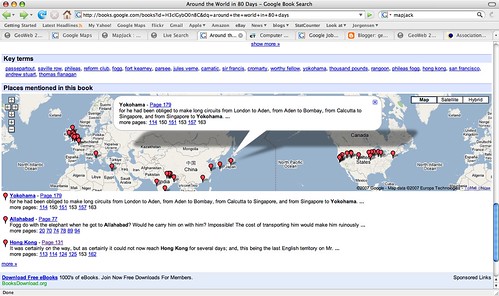

Perhaps one of the best examples of the latter was given by Michael Jones in his keynote, where he showed a very interesting example from Google book search, which I hadn't come across before. If you do a book search for Around the World in 80 Days, and scroll down to the bottom of the screen, you will see a map with markers showing all the places mentioned in the book. When you click on a marker, you get a list of the pages where this place is mentioned and in some cases can click through to that page.

This adds a powerful spatial dimension to a traditional text-based document. It is not much of a jump to think about incorporating this spatial dimension into the book search capability, and if you can do this on books, why not all documents indexed by Google? Michael said that he expected to see the "modality" of spatial browsing grow significantly in the next year, and he was originally going to show us a different non-public example in regard to this topic, but he couldn't as he had a problem connecting to the Google VPN. My interpretation of all this is that I think we will see some announcements from Google in the not too distant future that combine their traditional search with geospatial capabilities (of course people like MetaCarta have been doing similar things for a while, but as we have seen with Earth and Maps, if Google does it then things take on a whole new dimension).

Another item of interest that Michael mentioned is that Google is close to reaching an arrangement with the BC (British Columbia) government to publish a variety of their geospatial data via Google Earth and Maps. This was covered in an article in the Vancouver Sun, which has been referenced by various other blogs in the past couple of days (including AnyGeo, The Map Room, and All Points Blog). This could be a very significant development if other government agencies follow suit, which would make a lot of sense - it's a great way for government entities to serve their citizens, by making their data easily available through Google (or Microsoft, or whoever - this is not an exclusive arrangement with Google). There are a few other interesting things Michael mentioned which I'll save for another post.

One other theme which came up quite a lot during the conference was "traditional" geospatial data creation and update versus "user generated" data ("the crowd", "Web 2.0", etc). Several times people commented that we had attendees from two different worlds at the conference, the traditional GIS world and the "neogeography" world, and although events like this are helping to bring the two somewhat closer together, people from the two worlds tend to have differing views on topics like data update. Google's move with BC is one interesting step in bringing these together. Ron Lake also gave a good presentation with some interesting ideas on data update processes which could accommodate elements of both worlds. Important concepts here included the notions of features and observations, and of custodians, observers and subscribers. I may return to this topic in a future post.

As anticipated given the speakers, there were some good keynotes. Vint Cerf, vice president and chief Internet evangelist for Google, and widely known as a "Father of the Internet", kicked things off with an interesting presentation which talked about key architectural principles which he felt had contributed to the success of the Internet, and some thoughts on how some of these might apply to the "GeoWeb" - though as he said, he hadn't had a chance to spend too much time looking specifically at the geospatial area. I will do a separate post on that.

He was followed by Jack Dangermond, who talked on his current theme of "The Geographic Approach" - his presentation was basically a subset of the one he did at the recent ESRI user conference. He was passionate and articulate as always about all that geospatial technology can do for the world. A difference in emphasis between him and speakers from "the other world" is in the focus on the role of "GIS" and "GIS professionals". I agree that there will continue to be a lot of specialized tasks that will need to be done by "GIS professionals" - but what many of the "old guard" still don't realize, or don't want to accept, is that the great majority of useful work that is done with geospatial data will be done by people who are not geospatial professionals and do not have access to "traditional GIS" software. To extend an analogy I've used before, most useful work with numerical data is not done by mathematicians. This is not scary or bad or a knock on mathematicians (I happen to be one by the way), but it does mean that as a society we can leverage the power of numerical information by orders of magnitude more than we could if only a small elite clique of "certified mathematical professionals" were allowed to work with numbers. Substitute "geographical" or "geospatial" as appropriate in this statement to translate this to the current situation in our industry.

For example, one slide in Jack's presentation has the title "GIS servers manage geographic data". This is a true statement, but much more important is the fact that we are now in a world where ANY server can manage geographic data - formats like GeoRSS and KML enable this, together with the fact that all the major database management systems are providing support for spatial data. There is a widely stated "fact" that many people in the geospatial industry have quoted over the years, that something like 85% of data has a geospatial component (I have never seen a source for this claim though - has anyone else?). Whatever the actual number, it certainly seems reasonable to claim that "most" data has a spatial component. So does that mean that 85% of data needs to be stored in special "GIS servers"? Of course not, that is why it is so significant that we really are crossing the threshold to where geospatial data is just another data type, which can be handled by a wide range of information systems, so we can just add that spatial component into existing data where it currently is. Jack also continues to label Google and Microsoft as "consumer" systems when, as I've said before, they are clearly much more than that already, and their role in non-consumer applications will continue to increase rapidly.
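As a rough illustration of how little it takes for an ordinary server to publish a location, the sketch below adds a GeoRSS-Simple point to an existing RSS item using only the Python standard library. The item content and coordinates are made up, and `add_point` is a hypothetical helper name; the `georss:point` element with latitude first, then longitude, separated by a space, follows the GeoRSS-Simple encoding.

```python
import xml.etree.ElementTree as ET

GEORSS_NS = "http://www.georss.org/georss"

def add_point(item, lat, lon):
    """Attach a GeoRSS-Simple point (latitude, then longitude) to an RSS <item>."""
    ET.register_namespace("georss", GEORSS_NS)
    point = ET.SubElement(item, f"{{{GEORSS_NS}}}point")
    point.text = f"{lat} {lon}"
    return item

# A made-up feed item from some non-GIS system, e.g. an outage notification.
item = ET.fromstring("<item><title>Outage report</title></item>")
add_point(item, 49.28, -123.12)
print(ET.tostring(item, encoding="unicode"))
```

Nothing else about the feed has to change, which is really the point: the spatial component is added to the data where it already lives.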

But anyway, as Ron said in his introduction, it would be hard to get two better qualified people than Jack and Vint to talk about some of the key concepts of "geo" and "web", so it was an excellent opening session. I think that this post is more than long enough by this point, so I'll wrap it up here and save further ramblings for part 2!

Thursday, July 26, 2007

Microsoft Virtual Earth to support KML

This would make a huge amount of sense of course, given the amount of data which is being made available in KML, but nevertheless Microsoft does have something of a track record of trying to impose their own standards :), and they have been reluctant to commit to KML up to this point, so I think this is a very welcome announcement (assuming it's correct), which can only cement KML's position as a de facto standard (I don't think Microsoft could have stopped KML's momentum, but if they had released a competing format it would have been an unfortunate distraction).

Tuesday, July 24, 2007

Sad news about Larry Engelken

Friday, July 20, 2007

GeoWeb panel update

This closing panel session features senior visionaries who provide a synthetic take of GeoWeb 2007 and use this as a basis for forecasting the growth, evolution, and direction of the GeoWeb. Specifically, discussants will address:

What will it look like in 2012?

What device(s) will predominate?

What will be the greatest innovation?

What will be the largest impediment?

What market segments will it dominate?

What market segments will it fail to impact?

Each discussant will provide a five- to seven-minute statement touching on each of the questions above. A 30-minute question-and-answer session will follow. Answers will be limited to two minutes; each discussant has the opportunity to respond to each question.

Since I leave tomorrow morning to drive up there, I will have something to think about on the road! It should be a good panel I think.

Tuesday, July 17, 2007

Back from Mexico - some experiences with geotagging

This was my first vacation where I managed to reliably geotag all my photos - previously I had made a few half-hearted attempts but had failed to charge enough batteries to keep my GPS going consistently, or hadn't taken it everywhere. I used HoudahGeo to geotag the photos on my Mac, and this seemed to work fine, with a couple of minor complaints. One is that when it writes lat-long data to the EXIF metadata in the original images, for some reason iPhoto doesn't realize this, and doesn't show the lat-long when you ask for info on the photo (unless you export and re-import all the photos, in which case it does). The other is that most operations are fast, but for some reason writing the EXIF data to the photos, the final step in the workflow I use, is very slow - it would often take several minutes to do this for several hundred 10 megapixel images (a typical day's shooting for me), when the previous operations had taken a few seconds. But it did the job and all my photos have the lat-long where they were taken safely tucked away in their EXIF metadata.
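For anyone curious about what tracklog-based geotagging involves, the usual idea is to match each photo's timestamp against the GPS track and interpolate a position. The sketch below is only an illustration of that general technique, not a description of how HoudahGeo works internally; the `locate` function and the sample track points are made up.

```python
from bisect import bisect_left
from datetime import datetime

def locate(track, when):
    """Estimate (lat, lon) at time `when` from a time-sorted list of (time, lat, lon).

    Uses linear interpolation between the two surrounding track points, and
    falls back to the nearest end point when `when` is outside the track.
    """
    times = [t for t, _, _ in track]
    i = bisect_left(times, when)
    if i == 0:
        return track[0][1:]
    if i == len(track):
        return track[-1][1:]
    (t0, lat0, lon0), (t1, lat1, lon1) = track[i - 1], track[i]
    fraction = (when - t0).total_seconds() / (t1 - t0).total_seconds()
    return (lat0 + fraction * (lat1 - lat0), lon0 + fraction * (lon1 - lon0))

# Made-up track: two fixes ten minutes apart.
track = [
    (datetime(2007, 7, 10, 12, 0, 0), 20.0, -87.0),
    (datetime(2007, 7, 10, 12, 10, 0), 20.2, -87.4),
]

# A photo taken halfway between the two fixes lands halfway along the segment.
print(locate(track, datetime(2007, 7, 10, 12, 5, 0)))
```

This also shows why the camera clock and the GPS clock need to agree (or have a known offset), and why gaps in the track from flat batteries make the result unreliable.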

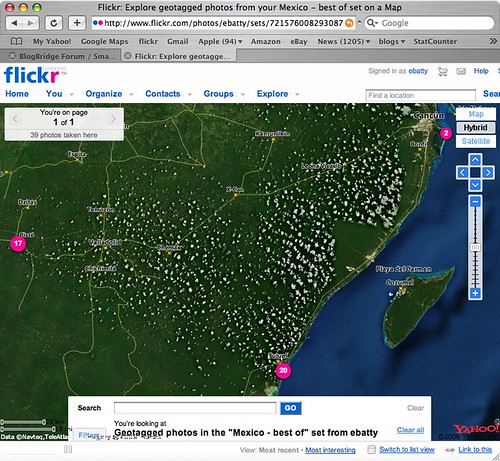

Flickr provides its own map viewing capability, which uses Yahoo! maps (not surprisingly since Yahoo! owns flickr). If you click here, you can see the live version of the map which is also shown in the following screen shot, showing the locations of my "top 40" pictures from the trip.

If you zoom in to the northeast location, there is some high quality imagery, in Cancun, which gives a good basis for seeing where the photos were taken. However, the imagery is very poor in the two main locations where we stayed, Tulum (to the south) and Chichen Itza (to the west). I looked in Google Earth and Google Maps, and they both had much better imagery in Tulum, though unfortunately not in Chichen Itza. Microsoft didn't have good imagery for either location. So I thought I should poke around a little more to explore the latest options for displaying geotagged photos from flickr in Google Earth or Google Maps.

Flickr actually has some nice native support now for generating feeds of geotagged photos in either KML or GeoRSS. So this link dynamically generates a KML file of my photos with the tags mexico and best (though neither Safari nor Firefox seems able to recognize this as a KML file automatically; I have to tell them to use Google Earth, and then it works fine). Unfortunately though, flickr feeds seem to return a maximum of 20 photos, and I haven't found any way around this. I can work around this by creating separate feeds for the best photos from Tulum, Chichen Itza and Cancun, but obviously that's not a good solution in all cases. These KML files work well in Google Earth, and one nice feature is that they include thumbnail versions of each photo which are directly displayed on the map (and when you click on those, you get a larger version displayed and the option to click again and display a full size photo back at flickr). However, the approach of using thumbnails does obscure the map more than if you use pins or other symbols to show the location of the photo - either approach may be preferred depending on the situation. These files don't display especially well in Google Maps - you get the icon, but the info window doesn't include the larger image or the links back to flickr - here's one example.

I looked around a little more and found this post at Ogle Earth which pointed me to this Yahoo Pipe which can be used to create a KML file from flickr. After a bit more messing around (you have to find things like your internal flickr id, which is non-obvious), I managed to produce this KML file which contains all of my "top 40" photos in a single file (you may need to right click the link and save the KML file, then open it in Google Earth). Of course I also needed to upload this file to somewhere accessible on the web, so all in all this involved quite a few steps. This KML file uses pins displayed on the map (with photo titles), rather than thumbnails, and again the info window displays a small version of the photo with an option to click a link back to flickr for larger versions. This KML also includes time stamps, which is interesting - if you are using Google Earth 4, you will see a "timer" bar at the top of the window when you select this layer. To see all the images, make sure you have the whole time window selected (at first this was not the case for me, so it seemed that some of the photos were missing). But if you select a smaller window, you can do an animation to show where the pictures were taken over time, which is also pretty cool.
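To show what the time stamps in such a file look like, and what selecting a time window amounts to, here is a small Python sketch. The sample KML, the placemark names, dates and coordinates are all made up for illustration (this is not the file produced by the Yahoo Pipe), and `placemarks_between` is a hypothetical helper. It assumes the OGC KML 2.2 namespace and `TimeStamp`/`when` values in the simple UTC form shown.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

NS = {"k": "http://www.opengis.net/kml/2.2"}

# Made-up sample: two geotagged photos with time stamps.
SAMPLE = """<kml xmlns="http://www.opengis.net/kml/2.2"><Document>
<Placemark><name>Tulum ruins</name>
  <TimeStamp><when>2007-07-08T10:00:00Z</when></TimeStamp>
  <Point><coordinates>-87.43,20.21</coordinates></Point></Placemark>
<Placemark><name>Chichen Itza pyramid</name>
  <TimeStamp><when>2007-07-11T15:30:00Z</when></TimeStamp>
  <Point><coordinates>-88.57,20.68</coordinates></Point></Placemark>
</Document></kml>"""

def placemarks_between(kml_text, start, end):
    """Return names of placemarks whose TimeStamp falls inside [start, end]."""
    root = ET.fromstring(kml_text)
    names = []
    for placemark in root.iterfind(".//k:Placemark", NS):
        when = placemark.findtext("k:TimeStamp/k:when", namespaces=NS)
        if when is None:
            continue  # placemarks without a time stamp are skipped in this sketch
        taken = datetime.strptime(when, "%Y-%m-%dT%H:%M:%SZ")
        if start <= taken <= end:
            names.append(placemark.findtext("k:name", namespaces=NS))
    return names

# A narrow window shows only some photos, much like a partly selected time bar.
print(placemarks_between(SAMPLE, datetime(2007, 7, 8), datetime(2007, 7, 9)))
```

Widening the window to cover the whole trip returns every placemark, which matches the behaviour described above where photos seemed to be missing until the full time window was selected.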

So in general conclusion, the tools to easily geotag your photos are pretty solid now - I have used both HoudahGeo on the Mac, and previously RoboGeo on the PC, and both worked well. The software available to display geotagged photos is getting better, but there's still room for improvement - but I'm sure things will continue to move along quickly in this area. I would like to see flickr add a "KML" button to their map displays, which would be much simpler than the current process!

Thursday, July 5, 2007

Off on vacation for a week

However, I did just buy myself a new Garmin 60CSx handheld GPS (since I still can't get any software I'm happy with to save tracklogs on the BlackBerry), and plan to test out the HoudahGeo application I just bought for the Mac to geotag all my photos (we will be going to the Mayan pyramids at Chichen Itza too, which I'm really looking forward to). I did a quick test of the HoudahGeo application in Denver this afternoon and it seemed to work fine (I had previously used RoboGeo on the PC, but that doesn't work on the Mac).

Cheers for now :).

iPhone after almost a week's experience

A few high points:

- The general user experience is great

- Web browsing - especially once you get used to the user interface and learn a few tricks, it's amazing how usable this is on a device with such a small screen. At first I was mainly zooming using the "pinch" technique, but on watching the "iPhone tour" video again I realized that double-clicking zooms you in to the specific area of the page where you double click (so in a page with multiple columns, it will set the display to the width of the column you click on). One drawback I hadn't thought about until Dave Stewart from the Microsoft Virtual Earth team mentioned it, though, is that Safari intercepts all double click and mouse move events in order to bring you this functionality, which is an issue for many browser based applications (especially mapping ones).

- Google maps - my concerns about the local search implementation notwithstanding, it's still very useful and fun in a lot of situations. I was just in a bar talking to a friend about my upcoming drive to Vancouver, and he said that he thought that the drive from Vancouver to Whistler was one of the most spectacular that he had done - but we got into a discussion about whether you could just drive on from Whistler to Jasper or would have to backtrack, and in a minute or so Google Maps on the iPhone had resolved the question for us (you can just continue on, so this is added into my plans). And at lunch today I was in a coffee shop and decided to search for local restaurants and look at their web sites, to see how easily I could check out their menus and decide where I'd like to go - this did work pretty well, as each search result included a link to the restaurant web site (and no bogus results were returned in this case)

- The Photo, iPod and Youtube applications are all great

- WiFi support - so far I have been using this most of the time, and get great performance and don't use up minutes on a plan, etc. This will be especially useful when traveling abroad, as the mobile phone companies all really burn you on charging for data over the cell phone networks, so being able to use WiFi will be great.

- It's a chick magnet at parties (my girlfriend Paula's description, not mine!)

A few things I would like to see improved:

- Add a GPS, of course

- Improve local search, as I discussed previously

- While the general user experience is great, there are a lot of areas where they could leverage some of the new techniques but didn't. Automatic rotation of applications into landscape mode is one area I would like to see leveraged a lot more. This is used to good effect in the web browser, which I almost always use in landscape mode as things are much more legible. And it is great in the photo and iPod applications (see the video). But when I'm in mail and I get an HTML formatted email, this is just like looking at a web page and would be far superior in landscape mode, yet this is not supported. As I mentioned previously, maps does not support this when it would be such a natural thing to do. And also, the keyboard is much wider with larger keys when in landscape mode, so it would be great to leverage this in situations where the focus is on data entry - for example in the notes application, or when composing an email, both of which only work in portrait mode. And there are some odd inconsistencies - both photos and maps support the notion of panning and zooming, and both support the same pinching technique for zooming in and out, and dragging with your finger to pan. But in maps, you double tap to zoom in and tap with two fingers to zoom out, whereas in photos, double click will either zoom in or zoom out depending on the situation (which is the same as in the browser). Maps also needs to support the typing auto-correction - it seems to be the only application which doesn't. These sorts of things should really be ironed out.